SmartSLAM

SmartSLAM is an open-source C++ library of probabilistic SLAM (Simultaneous Localization and Mapping) algorithms for robotics. Please note that SmartSLAM has not been maintained since 2013. Download SmartSLAM from SourceForge. Key publication:

- Siegfried Hochdorfer. “Contributions to robustness and resource awareness in life-long SLAM”. University of Ulm, Ulm, 2011.

Bearing-Only SLAM

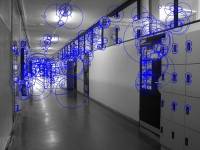

Bearing-Only SLAM is particularly well suited to service robots because it works with inexpensive sensors such as omnidirectional cameras. As long as no calibrated system is required, omnidirectional cameras are cheap and compact, which makes them suitable for service robots. Although they provide feature-rich information about the robot's surroundings at high update rates, they do not provide range information.

Thus, the sensor models of the well-known SLAM approaches have to be modified so that the observation angles of landmarks suffice to generate pose estimates. The difficulty is that several observations of the same landmark from different poses are needed to intersect the lines of sight. This is the so-called problem of delayed landmark initialization [Bai03]. It arises because the estimates of the poses from which not-yet-initialized landmarks were observed have to be corrected with each re-observation of an already known landmark. Therefore, the state vector is extended so that it contains not only the robot pose and the positions of the initialized landmarks but also the observation poses of not-yet-initialized landmarks.
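The augmented state described above can be pictured as follows. This is a minimal C++ sketch assuming a planar robot (pose x, y, theta) and point landmarks (x, y); all type and member names are hypothetical, and SmartSLAM's actual state layout may differ. Only the mean vector is modelled.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical layout of an augmented state vector for planar Bearing-Only
// SLAM; names and ordering are illustrative, not SmartSLAM's actual types.
struct Pose2D { double x, y, theta; };  // position [m], heading [rad]
struct Landmark2D { double x, y; };     // point landmark position [m]

struct AugmentedState {
    Pose2D robot{};                        // current robot pose
    std::vector<Landmark2D> landmarks;     // initialized landmarks
    std::vector<Pose2D> observationPoses;  // poses from which not-yet-initialized
                                           // landmarks were observed

    // Size of the mean vector (and of each dimension of the joint covariance).
    std::size_t dimension() const {
        return 3 + 2 * landmarks.size() + 3 * observationPoses.size();
    }

    // Flattens the state in the order [robot | landmarks | observation poses].
    std::vector<double> flatten() const {
        std::vector<double> v{robot.x, robot.y, robot.theta};
        for (const auto& l : landmarks) {
            v.push_back(l.x);
            v.push_back(l.y);
        }
        for (const auto& p : observationPoses) {
            v.push_back(p.x);
            v.push_back(p.y);
            v.push_back(p.theta);
        }
        assert(v.size() == dimension());
        return v;
    }

    // Stores a copy of the current robot pose as a new observation pose and
    // returns its index. Only the mean is augmented here; an EKF would also
    // duplicate the robot's rows and columns of the covariance matrix so that
    // the copy stays correlated with the rest of the state.
    std::size_t addObservationPose() {
        observationPoses.push_back(robot);
        return observationPoses.size() - 1;
    }
};
```

Keeping the observation poses inside the state is what lets every later correction of the robot trajectory also correct the poses at which pending bearings were recorded.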

These observation poses are then updated consistently by the SLAM mechanism. Since the measurements are relative to the observation poses, and these poses are updated consistently, angular observations can be transformed consistently into lines of sight in the global frame of reference, even if the measurements were taken at different points in time. This allows a deferred but consistent evaluation of measurements: the measurements of a candidate landmark are accumulated over time until sufficient information is available for a reliable landmark initialization.
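Once the observation poses have been corrected, two bearing measurements of the same landmark can be turned into global lines of sight and intersected. The following C++ sketch assumes planar poses and bearings measured relative to the robot heading. The function name `triangulate` and the minimum-parallax threshold, used here to decide whether enough information has been accumulated, are illustrative assumptions and not SmartSLAM's actual initialization criterion.

```cpp
#include <cassert>
#include <cmath>
#include <optional>

// Hypothetical types for illustration; not the SmartSLAM API.
struct Pose2D { double x, y, theta; };  // observation pose in the global frame
struct Point2D { double x, y; };

// z component of the 2D cross product (ax, ay) x (bx, by).
inline double cross(double ax, double ay, double bx, double by) {
    return ax * by - ay * bx;
}

// Intersects the lines of sight of two bearing measurements taken from two
// observation poses. Returns std::nullopt if the rays are too close to parallel
// (insufficient parallax) or do not meet in front of both poses, i.e. if more
// observations should be accumulated first. minParallaxRad must lie in (0, pi/2).
std::optional<Point2D> triangulate(const Pose2D& p1, double bearing1,
                                   const Pose2D& p2, double bearing2,
                                   double minParallaxRad) {
    assert(minParallaxRad > 0.0);
    // A bearing becomes a global direction by adding the observation heading.
    const double a1 = p1.theta + bearing1;
    const double a2 = p2.theta + bearing2;
    const double d1x = std::cos(a1), d1y = std::sin(a1);
    const double d2x = std::cos(a2), d2y = std::sin(a2);

    // sin of the angle between the rays; near zero means ill-conditioned.
    const double denom = cross(d1x, d1y, d2x, d2y);
    if (std::fabs(denom) < std::sin(minParallaxRad)) return std::nullopt;

    // Solve p1 + t1 * d1 = p2 + t2 * d2 for the ray parameters t1 and t2.
    const double bx = p2.x - p1.x, by = p2.y - p1.y;
    const double t1 = cross(bx, by, d2x, d2y) / denom;
    const double t2 = cross(bx, by, d1x, d1y) / denom;
    if (t1 <= 0.0 || t2 <= 0.0) return std::nullopt;  // behind an observer

    return Point2D{p1.x + t1 * d1x, p1.y + t1 * d1y};
}
```

For example, observing a landmark at (2, 2) from pose (0, 0, 0) under bearing π/4 and from pose (4, 0, 0) under bearing 3π/4 yields (2, 2), whereas two parallel lines of sight yield no estimate.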

Installation of SmartSLAM

Install dependencies

- OpenCV 2.x

- Player (recent stable version), only if the Player wrapper is needed

- SmartSoft, only if the SmartSoft wrapper is needed

- MRPT (0.7.1) from http://mrpt.org/

Get SmartSLAM from http://sourceforge.net/projects/smartslam/:

svn co https://smartslam.svn.sourceforge.net/svnroot/smartslam smartSlam

Build SmartSLAM using Eclipse (CDT)

An Eclipse project file is included for building the SmartSLAM application.

There are three different build targets:

- Simulation wrapper ⇒ Uses files from previous runs as data input

- SmartSoft wrapper ⇒ Uses the SmartSoft framework with smartPioneerBaseServer and smartImageServer for data input/output. The SmartSoft wrapper is shipped in the main SmartSoft SVN repository, available via SourceForge.

- Player wrapper ⇒ Uses Player as data input.

For evaluation purposes, we recommend using the simulation wrapper together with a ready-to-use dataset, available at http://sourceforge.net/projects/smartslam/files/ (see the manual contained in the dataset archive).

To use the algorithm on a real robot, we recommend the SmartSoft wrapper together with the SmartSoft framework.

Import SmartSLAM into Eclipse:

- Open a recent version of eclipse, containing the CDT plug-in.

- Select “Import” in the “File” menu.

- Select “Existing Projects into Workspace”.

- Select the previously checked-out “smartslam/trunk/BearingOnlySLAM” directory.

- “BearingOnlySLAM” should now appear in the list of projects to import.

- Confirm with “Finish”.

If everything worked so far, the BearingOnlySLAM project now appears in the Eclipse Navigator.

Now you are ready to build the application. Beforehand, it may be necessary to select the build configuration that matches the target you want to build:

- Right-click the “BearingOnlySLAM” project in the Navigator and select “Build Configurations”, “Set Active” and BUILD_CONFIGURATION.

- Right-click the “BearingOnlySLAM” project in the Navigator and select “Build Project”.

If everything worked, the BearingOnlySLAM checkout now contains a directory with an executable matching the chosen build configuration.

Configure SmartSLAM

Configure SmartSLAM using the *BearingOnlySlam.ini file that matches the desired target. Take special care with values that contain an absolute path.
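Absolute paths break silently when a checkout or dataset is moved, so a quick check before starting can help. The sketch below is a hypothetical C++17 helper, not part of SmartSLAM. It assumes plain INI syntax (`[section]` headers, `;` or `#` comment lines, `key = value` pairs) and does not know which keys SmartSLAM actually reads; it simply lists every absolute-path value that does not exist on the current machine.

```cpp
#include <filesystem>
#include <sstream>
#include <string>
#include <system_error>
#include <vector>

// Hypothetical helper, not part of SmartSLAM: returns the "key = value" entries
// of an INI text whose value is an absolute path that cannot be found on this
// machine. Assumes simple INI syntax: [section] headers, ';' or '#' comment
// lines and key = value pairs; quoted values are not handled.
std::vector<std::string> missingAbsolutePaths(const std::string& iniText) {
    namespace fs = std::filesystem;
    // Strips leading and trailing whitespace.
    auto trim = [](const std::string& s) {
        const char* ws = " \t\r\n";
        const auto first = s.find_first_not_of(ws);
        if (first == std::string::npos) return std::string{};
        const auto last = s.find_last_not_of(ws);
        return s.substr(first, last - first + 1);
    };

    std::vector<std::string> missing;
    std::istringstream in(iniText);
    std::string line;
    while (std::getline(in, line)) {
        line = trim(line);
        if (line.empty() || line[0] == ';' || line[0] == '#' || line[0] == '[') continue;
        const auto eq = line.find('=');
        if (eq == std::string::npos) continue;
        const std::string key = trim(line.substr(0, eq));
        const std::string value = trim(line.substr(eq + 1));
        const fs::path p(value);
        std::error_code ec;  // exists() reports errors (e.g. no permission) as false
        if (p.is_absolute() && !fs::exists(p, ec)) missing.push_back(key + " = " + value);
    }
    return missing;
}
```

To check a configuration file, read it into a string (for example via `std::ifstream` and `std::ostringstream`) and print the returned entries; relative paths are deliberately ignored.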

Run SmartSLAM

To start the application, run the compiled executable with the previously configured .ini file as its argument:

simulated_Release/simulatedBearingOnlySlam simulationWrapper/simulatedBearingOnlySlam.ini

Instructions for running the BearingOnlySlam application in simulated mode with a file-based data source are available, together with a complete dataset, at: http://sourceforge.net/projects/smartslam/files/

6DoF Feature-Based SLAM

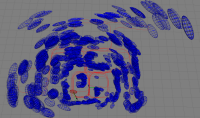

The 6DoF Feature-Based SLAM approach belongs to the category of fully observable SLAM approaches. In such systems, a single measurement provides enough information to compute the full state of the observed landmark, so, unlike in Bearing-Only SLAM, no delayed landmark initialization is needed. The 6DoF Feature-Based SLAM system uses odometry and inclinometer data to predict the robot pose, and 3D landmark measurements to update the robot pose and the landmark positions. The 3D landmarks can be extracted from ToF camera, stereo vision or Kinect data.
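Full observability means that a single 3D measurement, combined with the current 6DoF robot pose, already determines the landmark position in the world frame; the inverse transform gives the expected measurement of a known landmark used in the update step. The C++ sketch below assumes a Z-Y-X (yaw-pitch-roll) Euler convention, with roll and pitch as an inclinometer would provide them. The names are hypothetical, and the Jacobians and covariances an EKF would need are omitted.

```cpp
#include <array>
#include <cmath>

// Hypothetical types; not the SmartSLAM API. Angles in rad, lengths in m.
struct Pose6D { double x, y, z, roll, pitch, yaw; };
using Vec3 = std::array<double, 3>;
using Mat3 = std::array<std::array<double, 3>, 3>;

// Rotation from the robot frame to the world frame, assuming the Z-Y-X
// convention R = Rz(yaw) * Ry(pitch) * Rx(roll).
Mat3 rotation(const Pose6D& p) {
    const double cr = std::cos(p.roll), sr = std::sin(p.roll);
    const double cp = std::cos(p.pitch), sp = std::sin(p.pitch);
    const double cy = std::cos(p.yaw), sy = std::sin(p.yaw);
    return {{{cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr},
             {sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr},
             {-sp, cp * sr, cp * cr}}};
}

// Landmark initialization: one 3D measurement m in the robot frame yields the
// landmark position in the world frame, l = t + R * m.
Vec3 measurementToWorld(const Pose6D& p, const Vec3& m) {
    const Mat3 R = rotation(p);
    Vec3 l{p.x, p.y, p.z};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) l[i] += R[i][j] * m[j];
    return l;
}

// Measurement prediction for the update step: the expected observation of a
// known landmark l from pose p, m = R^T * (l - t).
Vec3 predictMeasurement(const Pose6D& p, const Vec3& l) {
    const Mat3 R = rotation(p);
    const Vec3 d{l[0] - p.x, l[1] - p.y, l[2] - p.z};
    Vec3 m{0.0, 0.0, 0.0};
    for (int i = 0; i < 3; ++i)
        for (int j = 0; j < 3; ++j) m[i] += R[j][i] * d[j];  // transpose of R
    return m;
}
```

For a robot at (1, 2, 0.5) with a yaw of 90 degrees and zero roll and pitch, a landmark measured 1 m straight ahead lies at (1, 3, 0.5) in the world frame.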

Installation

Install Dependencies

- MRPT 0.9.4 from www.mrpt.org

- OpenCV 2.2 from code.opencv.org

- Octomap (Revision >= 158) from octomap.sourceforge.net

- Point Cloud Library (PCL) from pointclouds.org, only if 3D obstacle mapping is needed.

Instructions

Get SmartSLAM from http://sourceforge.net/projects/smartslam/:

svn co https://smartslam.svn.sourceforge.net/svnroot/smartslam smartSlam

Using the algorithm on a real robot requires additional dependencies. For our real-world experiments, we use a Pioneer 3DX, an Xsens IMU and a ToF camera from PMD Tec (CamCube 2).

- PMD SDK2 for Linux:

It is available on the CamCube 2 driver CD. Install PMD SDK 2 as described in the PMD installation document. Don't forget to also copy the include files into a directory where the compiler can find them (e.g. /usr/local/include/).

- XSensSDK:

It is available on the driver CD. Copy both the include folder and the lib folder of the library into the folder <3DSLAM>/XSenseSDK/, so that the XSenseSDK folder in the project directory contains the lib and include folders.

To use the PMD CamCube as a regular user (not as root), define a udev rule by creating a rules file in the folder /etc/udev/rules.d. When the USB cable is plugged in, this rule changes the group of the device to “users” and sets its permissions to 0766, so that every member of the group “users” can use the PMD CamCube. The rules file contains two further entries, one for the Xsens IMU and one for the Pioneer robot. These entries are necessary so that the application can find the devices under fixed names (xsens for the Xsens IMU and pioneerRobot for the Pioneer 3DX).

# ZAFH HS-Ulm Rules

# PMD CamCube 2

ATTRS{idVendor}=="1c28",ATTRS{idProduct}=="c003", GROUP="users", MODE="0766", SYMLINK+="pmdCamCube2"

# XSens IMU

KERNEL=="ttyUSB*", ATTRS{idVendor}=="0403", ATTRS{idProduct}=="d38b", GROUP="users", SYMLINK+="xsens"

# Pioneer Robot

KERNEL=="ttyUSB*", ATTRS{idVendor}=="067b", ATTRS{idProduct}=="2303", SYMLINK+="pioneerRobot", GROUP="users", MODE="0766"
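After plugging in the devices, a small check can confirm that the names defined via SYMLINK+= in the rules file actually exist. This hypothetical C++17 helper is not part of SmartSLAM; it assumes the symlinks are created under /dev, which is where udev places them, and takes the directory as a parameter only to make it testable.

```cpp
#include <filesystem>
#include <string>
#include <system_error>
#include <vector>

// Hypothetical check, not part of SmartSLAM: returns the device names from the
// udev rules file that are missing in devDir (normally "/dev").
std::vector<std::string> missingDevices(const std::filesystem::path& devDir) {
    const std::vector<std::string> names{"pmdCamCube2", "xsens", "pioneerRobot"};
    std::vector<std::string> missing;
    for (const auto& name : names) {
        std::error_code ec;
        // exists() follows symlinks, so a link whose target is gone counts as missing.
        if (!std::filesystem::exists(devDir / name, ec)) missing.push_back(name);
    }
    return missing;
}
```

On the robot, call `missingDevices("/dev")`; an empty result means all three rules matched.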

Build 6DoF Feature-Based SLAM

To build the offline version of the 6DoF Feature-Based SLAM application, run “make” in the subfolder simulationApp_Release.

To build the online 6DoF Feature-Based SLAM application for a real robot, run “make” in the subfolder experimentApp_Release.

Publications

Matthias Lutz, Siegfried Hochdorfer, Christian Schlegel. “Global Localization using Multiple Hypothesis Tracking: A real-world Approach”. In Proc. IEEE Int. Conf. on Technologies for Practical Robot Applications (TePRA), pages 127-132, Woburn, Massachusetts, USA, 2011. ISBN 978-1-61284-480-0.

Siegfried Hochdorfer, Christian Schlegel. “6 DoF SLAM using a ToF Camera: The challenge of a continuously growing number of landmarks”. In The 2010 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2010), Taipei, Taiwan, October 18-22, 2010.

Siegfried Hochdorfer, Christian Schlegel. “Landmark Rating and Selection: Considering the Observability Regions”. In The 11th Int. Conf. on Intelligent Autonomous Systems (IAS-11), Ottawa, Canada, August 30 - September 1, 2010.

Manuel Wopfner, Jonas Brich, Siegfried Hochdorfer, Christian Schlegel. “Mobile Manipulation in Service Robotics: Scene and Object Recognition with Manipulator-Mounted Laser Ranger”. In ISR / ROBOTIK 2010, Munich, June 7-9, 2010.

Siegfried Hochdorfer, Matthias Lutz, Christian Schlegel. “Bearing-Only SLAM in everyday environments using Omnidirectional Vision”. In IEEE ICRA 2010 Workshop on Omnidirectional Robot Vision, Anchorage, Alaska, USA, May 7, 2010.

Siegfried Hochdorfer, Matthias Lutz, Christian Schlegel. “Lifelong Localization of a Mobile Service-robot in Everyday Indoor Environment Using Omnidirectional Vision”. In Proc. IEEE Int. Conf. on Technologies for Practical Robot Applications (TePRA), Woburn, Massachusetts, USA, 2009.

Siegfried Hochdorfer, Christian Schlegel. “Landmark rating and selection according to localization coverage: Addressing the challenge of lifelong operation of SLAM in service robots”. In Proc. IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), St. Louis, Missouri, USA, 2009.

Siegfried Hochdorfer, Christian Schlegel. “Towards a robust Visual SLAM Approach: Addressing the Challenge of life-long Operation”. In Proc. 14th Int. Conf. on Advanced Robotics (ICAR), Munich, 2009.

Christian Schlegel, Siegfried Hochdorfer. “Localization and Mapping for Service Robots: Bearing-Only SLAM with an Omnicam”. In Advances in Service Robotics, IN-TECH, 2008.

Siegfried Hochdorfer, Christian Schlegel. “Bearing-Only SLAM with an Omnicam: Robust Selection of SIFT Features for Service Robots”. In 20. Fachgespräch Autonome Mobile Systeme (AMS), pages 8-14, Informatik aktuell, Springer, Kaiserslautern, October 2007.

Christian Schlegel, Siegfried Hochdorfer. “Bearing-Only SLAM with an Omnicam - An Experimental Evaluation for Service Robotics Applications”. In 19. Fachgespräch Autonome Mobile Systeme (AMS), pages 99-106, Springer, Stuttgart, 2005.